Last Updated on October 3, 2024 by mike

TL;DR: In this recap of IOH #171, follow along as indexers share early testing results from applying the account-like flag for performance, what’s coming for indexer-agent, and progress on a subgraph composition feature, plus tips for removing chains from your configuration and managing chain data.

Opening remarks

Hello everyone, and welcome to episode 171 of Indexer Office Hours!

GRTiQ 182

Catch the GRTiQ Podcast with Emily Lin, Developer Relations at Scroll, an innovative layer-2 scaling solution for Ethereum. Emily shares her journey into the tech and web3 space, including experiences working at various tech companies like ConsenSys.

Discover Emily’s perspective on why The Graph is important to her work.

Repo watch

The latest updates to important repositories

Execution Layer Clients

- Erigon: New release v2.60.6:

  - The release includes improvements in stateDiff encoding compatibility with Geth, enhanced callTracer functionality, and a bug fix for missing blobs during backfilling.

  - The GraphOps team has tested this release on their testnet.

- Nethermind: New release v1.28.0:

  - eth_simulate is a new JSON-RPC method that builds on eth_call, allowing simulation (mocking) of multiple blocks with multiple transactions.

  - Optimism adjustments and fixes.

  - Gnosis and Chiado snap sync enabled by default.

- Arbitrum Nitro: New release v3.1.1-beta.3:

  - This release introduces various validation fixes and a Docker image with updated default flags.

  - Key changes include configuration updates that change string values in JSON to arrays, compatibility fixes for split validation, and removal of the default Nitro validation RPC request size limit.

  - There are also internal updates, such as running batches through the inbox multiplexer before posting and updating the pinned Rust version in the Dockerfile.

  - Stylus validation remains incompatible with previous databases; a workaround is provided for Stylus-enabled chains.

Launchpad Stack

- New chart versions released with enhanced features and bug fixes:

  - Proxyd

  - Erigon

  - Nimbus

  - Lighthouse

- We’ve improved all of our charts that use P2P so they retry fetching the external IP if the first attempt fails: Subgraph-Radio, Celo, Erigon, Lighthouse, Listener-Radio, Nethermind, Nimbus.

- New stable versions of the Ethereum, Gnosis, Polygon, Arbitrum, Celo, Monitoring, and Graph namespaces.

Issues:

- Launchpad charts issues: View or report issues

- Launchpad namespaces issues: View or report issues

Matthew Darwin | Pinax in the chat: Do you do something special to get external IPs (in Launchpad)?

Ana | GraphOps: Yes, we have an init container in Kubernetes for the resources that run P2P. If your Kubernetes cluster exposes external IPs via the nodes, a straightforward command reports that IP, which is then used to update the P2P service. If that’s not available, we run a different command. We’ve improved that mechanism.

Matthew: Ok, so you don’t let the P2P self-discover? (we can take this offline)

Ana: There is the P2P self-discovery as well, but you still need the external IP. Yes, let’s take this offline for more detail.

Example: launchpad-charts/charts/celo/templates/celo/statefulset.yaml
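The retry-and-fallback behavior Ana describes can be illustrated with a minimal Python sketch. It assumes two hypothetical lookups passed in as callables: one reads the IP exposed via the Kubernetes node, and the other stands in for the alternative command. The real Launchpad charts do this in a shell-based init container, and this sketch does not mirror their code.

```python
import time
from typing import Callable, Optional

IpSource = Callable[[], Optional[str]]


def fetch_external_ip(
    primary: IpSource,   # hypothetical: IP reported via the Kubernetes node
    fallback: IpSource,  # hypothetical: alternative lookup when the node has none
    attempts: int = 3,
    delay_s: float = 1.0,
) -> str:
    """Try the primary source, then the fallback, retrying the pair on failure."""
    for attempt in range(1, attempts + 1):
        for source in (primary, fallback):
            try:
                ip = source()
            except Exception:
                ip = None  # treat a failed lookup like "no IP yet" and keep going
            if ip:
                return ip
        if attempt < attempts:
            time.sleep(delay_s)  # wait before retrying both sources
    raise RuntimeError(f"could not determine external IP after {attempts} attempts")


# The node exposes nothing, so the fallback answers.
print(fetch_external_ip(lambda: None, lambda: "203.0.113.7", delay_s=0))
```

The key design point is that a failed first attempt is no longer fatal: both sources are retried a bounded number of times before the init step gives up.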

Protocol watch

Contracts Repository

- fix: revert when delegating, undelegating or withdrawing from an invalid delegation pool (OZ M-06) #999 (open)

- fix: add PaymentsEscrow functions for collector tracking (OZ M-04) #996 (closed)

- fix: slashing reverts when exceeding provision balance (OZ M-05) #997 (open)

- fix: early return in Staking withdraw (OZ M-03) #994 (open)

- fix: remove maxTokensSlash from DisputeManager (OZ M-02) #995 (open)

- fix: service provider forcing revert when slashing (OZ H-02) #998 (open)

- fix: resize only open allocations and access control (OZ C-04, C-05 and M-01) #992 (open)

- fix: subgraphService access control (OZ C-04) #990 (open)

- fix: addToDelegationPool token transfer (OZ C-01 and C-02) #988 (open)

- fix: change pending rewards due to resize to token amounts instead of per token (OZ H-01) #993 (open)

- fix: update subgraph endpoints to network and some linting #991 (open)

Open discussion

This week, we wanted to have more time for questions raised by Derek’s PostgreSQL setup demo from last week and any updates from testing that has been done since then.

Review last week’s discussion in The Graph Indexer Office Hours #170.

Note: Comments have been lightly edited and condensed.

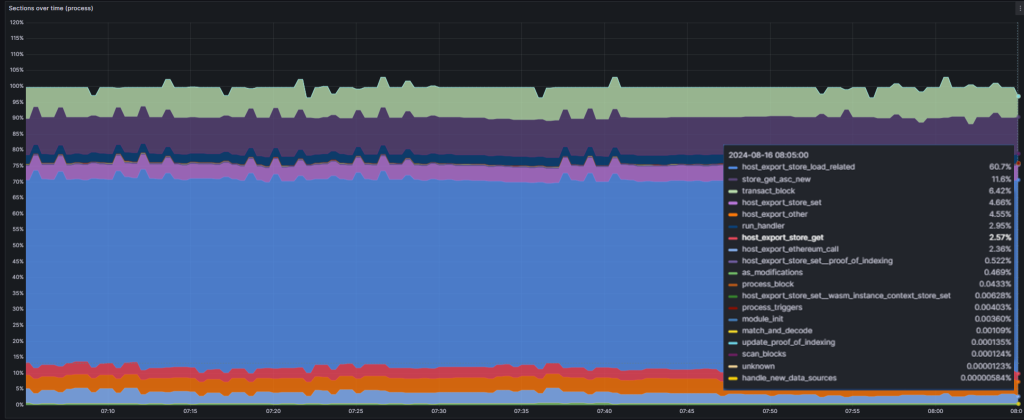

Testing turning on account-like for tables [9:36]

Jim | Wavefive: Derek and I tested turning on account-like for tables late last week. Using account-like is low-hanging fruit: potential performance gains for very little effort. We ran some queries to identify tables matching the profile of tables where Derek has had good results adding the account-like flag.

We ran Derek’s query on my legacy, monolithic database, and it reported a lot of tables as meeting the criteria and worth flagging as account-like.

We’re monitoring the data now, and there are early signs that it’s definitely improving performance. It’s not a radical improvement, because I already had the flag turned on for a lot of the subgraphs that account for most of my traffic, but there are definite improvements in latency, or processing time, for queries on those tables. We need to keep gathering data to see whether there’s a long-term effect.

Derek | Data Nexus: What we’re trying to do here is find what in a database looks bloated and could potentially use some optimization, regardless of how much of your server’s resources it actually consumes. As Jim said, we found 182 tables that were probable candidates for the account-like flag, because they have more than 3 million records and a very high ratio of entity versions to distinct entities. These thresholds were chosen arbitrarily and may not be the sweet spot, so we want to test them and figure out at what point the bloat really matters.

Is it closer to 5 million records, is it 10 million, or is it 1 million?

We’re still testing and will share the results, but so far we’re seeing a general performance improvement. Again, though, we don’t know how many of those 182 tables are actually used in queries; maybe only 30 of them are. A subgraph might be queried while that specific table in it is never touched.

Applying this flag does not increase storage.

Payne | StakeSquid: Should we build a script that parses all the results from graphman stats show?

Derek: Yeah, that’s essentially what this query does. It uses the same logic as graphman stats show, opens it up to all subgraphs in the database, and then limits it to tables with more than 3 million records and a ratio of distinct entities to rows below 0.1%.

Here’s the query. I wouldn’t go wild with it because we’re still testing, but it’s the same query that graphman stats show uses.

select s.schemaname as schema_name, c.relname as table_name
from pg_namespace n, pg_class c, pg_stats s
-- map each sgdNNN schema back to its deployment row
join subgraphs.subgraph_deployment sd on sd.id::text = substring(s.schemaname, 4, length(s.schemaname))
-- pick up any existing account-like setting for the table
left join subgraphs.table_stats ts on ts.deployment = sd.id and s.tablename = ts.table_name
where c.relnamespace = n.oid
  and s.schemaname = n.nspname
  and c.relname not in ('poi2$', 'data_sources$')
  and s.attname = 'id'
  and c.relname = s.tablename
  -- only tables with more than 3 million rows (planner estimate)
  and c.reltuples::int8 > 3000000
  -- skip tables that are already account-like
  and coalesce(ts.is_account_like, false) = false
  -- distinct ids / total rows below 0.1%, i.e. many versions per entity
  and case when c.reltuples = 0 then 0::float8
           when s.n_distinct < 0 then (-s.n_distinct)::float8
           else greatest(s.n_distinct, 1)::float8 / c.reltuples::float8 end < 0.001
order by s.schemaname, c.relname;
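The query’s filter is easier to reason about when written as a small Python function. This is a sketch of the same candidate test, not part of graphman.

It mirrors the CASE expression:

- A negative n_distinct in pg_stats is already the fraction of rows that are distinct.
- A positive n_distinct is an estimated count of distinct ids.

The 3 million row and 0.001 thresholds are the provisional values from the discussion. They are exposed as parameters because the right cut-off is still being tested.

```python
def distinct_ratio(reltuples: float, n_distinct: float) -> float:
    """Estimated distinct ids divided by total rows, following the query's CASE expression."""
    if reltuples == 0:
        return 0.0
    if n_distinct < 0:
        # Postgres stores a negative n_distinct as -(distinct / rows)
        return -n_distinct
    return max(n_distinct, 1) / reltuples


def is_account_like_candidate(
    reltuples: float,
    n_distinct: float,
    already_account_like: bool = False,
    min_rows: int = 3_000_000,
    max_ratio: float = 0.001,
) -> bool:
    """True for large tables holding many versions per entity that are not yet flagged."""
    if already_account_like:
        return False
    return reltuples > min_rows and distinct_ratio(reltuples, n_distinct) < max_ratio


# 10M rows but only 5,000 distinct ids: about 2,000 versions per entity
print(is_account_like_candidate(10_000_000, 5_000))  # True
# Half the rows are distinct entities: not a candidate
print(is_account_like_candidate(10_000_000, -0.5))  # False
```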

Stake🦑Squid Scripts [18:57]

Jim | Wavefive: I’ve built a new stack that can scale horizontally and now I’m trying to move from my legacy stack to my new one. I’ve given up on the idea of migrating subgraphs for now as it’s quite complex and risky. I’ve decided I’m going to sync them all.

So what I want to do is take all of the subgraphs I’m currently allocated to and feed them into a script that hunts for all the grafts those subgraphs need. Then I want a simple script that adds all of those subgraphs: the ones I’m allocated to plus every graft required for my new deployment. Then I’ll sync them all over the next couple of weeks.

Payne has written a set of complex scripts that do something along those lines, but I don’t fully understand how they work. The script collects all of the subgraphs on the network and filters them by signal, I think, and by whether the subgraph has rewards. Should I link it, Payne, or is it out of date?

Payne: It should still work. I’m going to do a pass on all my scripts and repost everything. I will make a public repo.

Jim posted the Discord link to his questions about the scripts: indexer-software channel in The Graph’s Discord server

Payne: The scripts basically work like this. The first one, the scan, pulls the list of all the subgraphs on the network and stores it in memory. For each subgraph in that list, it queries the network subgraph to get the manifest. From that manifest it gets the first level of grafts, and only the first level, because I don’t want to overload IPFS too much. With that first list of grafts, it queries again, this time against IPFS, because the grafted deployments can no longer be found in the network subgraph. It queries IPFS for each of those subgraphs to find out whether there are further chains of grafts.

I have another one that gives you a table, which is a better visualization of the data.

I also have a separate script that you can feed a manual list of subgraphs.
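The graft-chain walk Payne describes can be sketched in a few lines of Python. This is not Payne’s script.

A few assumptions:

- fetch_manifest is a hypothetical callable that returns a parsed subgraph manifest. In his scripts, the first level comes from the network subgraph and deeper levels come from IPFS.
- The sketch relies only on the manifest’s optional graft section and its base deployment ID.
- The IDs "QmA" to "QmD" are made-up placeholders.

The output is every deployment to sync, with graft bases ordered ahead of the subgraphs that depend on them.

```python
from typing import Any, Callable, Dict, List

Manifest = Dict[str, Any]


def graft_chain(deployment: str, fetch_manifest: Callable[[str], Manifest]) -> List[str]:
    """Follow graft.base links from `deployment`, nearest base first."""
    chain: List[str] = []
    seen = {deployment}
    current = deployment
    while True:
        graft = fetch_manifest(current).get("graft")
        if not graft:
            break  # reached a deployment with no graft section
        base = graft["base"]
        if base in seen:
            break  # defensive: never loop on a malformed chain
        chain.append(base)
        seen.add(base)
        current = base
    return chain


def deployments_to_sync(
    allocated: List[str], fetch_manifest: Callable[[str], Manifest]
) -> List[str]:
    """Allocated deployments plus all their graft bases, deepest base first, no duplicates."""
    ordered: List[str] = []
    for dep in allocated:
        for d in list(reversed(graft_chain(dep, fetch_manifest))) + [dep]:
            if d not in ordered:
                ordered.append(d)
    return ordered


manifests = {
    "QmC": {"graft": {"base": "QmB", "block": 200}},
    "QmB": {"graft": {"base": "QmA", "block": 100}},
    "QmA": {},
    "QmD": {},
}
print(deployments_to_sync(["QmC", "QmD"], manifests.__getitem__))
# ['QmA', 'QmB', 'QmC', 'QmD']
```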

WIP: automatic graft handling support in indexer-agent

Meanwhile, in the chat:

Ford: Speaking of graft dependency handling, automatic graft handling support in indexer-agent is WIP now.

Jim: That’s great to hear, Ford. I’m so desperate for that functionality right now.

Colin | StreamingFast: Ford, will the dependency handling work for when we remove a subgraph (i.e., removes all grafts)?

- Ford: Not sure of the specifics, but I think yes.

Ford: In progress, so make your suggestions now or forever hold your peace! (jk, but now is a good time to shape the feature.)

Vince | Nodeify: wen 1 graft max enforced?

- Ford: I was pushing for that, but got halted as many big users on the network would have their current subgraph dev workflows broken by that limit. We’ll need to do some other work to prepare for that.

Removing chains [25:43]

Jim | Wavefive: How are you managing adding and removing chains from your configuration? Or do you even remove them?

Vincent | Data Nexus: You can remove chains via graphman, but it requires removing all subgraphs that index the chain you are trying to remove.

Vince | Nodeify posted in the chat:

Usage: graphman --config <CONFIG> chain <COMMAND>

Commands:
  list          List all chains that are in the database
  info          Show information about a chain
  remove        Remove a chain and all its data
  check-blocks  Compares cached blocks with fresh ones and clears the block cache when they differ
  truncate      Truncates the whole block cache for the given chain
  change-shard  Change the block cache shard for a chain
  call-cache    Execute operations on call cache
  help          Print this message or the help of the given subcommand(s)

Slimchance: graphman chain call-cache remove <CHAIN ID>

graphman chain remove <CHAIN ID> #This also deletes the block cache

You can’t do remove if you have subgraphs deployed on the chain. I tested it. You get a very clear error message. If I remember correctly, something like, “There are 8 deployments using this network.”

Hau | Pinax: graphman chain truncate <chain>

Vincent | Data Nexus: I think truncate just deletes all of the data but keeps the chain.

Processing grafts [29:22]

Derek | Data Nexus posted: One thing that would be nice is if the prior deployment “morphed” into the new deployment, rather than persisting the old deployment in parallel with the new one. Could we just update the name/deploymentId of the subgraph rather than having to copy all of the state to a second place in the db?

Vincent | Data Nexus posted: This would probably have to be implemented as a graph-node feature.

Ford: Yeah, that’s something we’ve talked about with the Graph Node engineers. There are a few tricky parts, and it’s more complex than it looks on the surface, but it’s definitely doable. There are some cases where you might want to keep the data cleanly separated, such as when a base dependency is used by multiple grafted subgraphs, but that doesn’t happen very often. So I think it comes down to working through a few determinism issues and making sure we can stop a subgraph in a clean state and then start it again with the new code.

WIP: subgraph composition feature [31:44]

Ford: We’re working on a subgraph composition feature. It’s still experimental. A subgraph could use another subgraph as a data source instead of relying on a pure graft. That could give us cleaner reuse of data when many subgraphs depend on one, without having to copy data around everywhere.

Jim | Wavefive: Am I going to use less disk space as an indexer?

Ford: It’s index-time composition. There are other forms of composition where multiple subgraphs live in their own separate schemas and tables and you join across them at query time. That would be a little different.

This is in your subgraph manifest, where right now you define data sources and they’re typically a contract address and some events that you’re listening to. This would offer a new type of data source where instead of it being an external contract, it’s basically a subgraph you already have in your database. So when the dependent subgraph is syncing, it’s basically sending queries to the database instead of using Firehose, Substreams, or RPC.

That doesn’t mean you’ll see huge storage improvements immediately. But if this gets well used on the network, we could reach a point where a handful of core subgraphs hold a lot of simple, raw data, maybe just decoded data, that many other subgraphs rely on. As an indexer, you would then store much less repeated data, because one base subgraph would serve that core data to many others instead of the data being repeated across a bunch of subgraphs.

Slimchance posted: So the data could live on multiple subgraphs. I am glad we have BuildersDAO to help developers use this for good and not for evil. 😅

Derek | Data Nexus: Is the thought with the subgraph data sources that they will have their own triggers, like their own handlers, or is it just something that can be referenced while another event handler is firing?

Ford: They would have their own handlers, but instead of the data that’s feeding into those handlers coming from an external source, it would be coming from the database. The input to your mapping handler would be like the entities in your database.

Derek: So when entity changes occur or something, that could then trigger something downstream on the data source.

Ford: Exactly. Right now, the approach is basically block by block, so it’s kind of like a block handler, but it will only be if there are actual entity changes. So every time there’s a block with entity changes, it would gather all changes for that block.
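The block-by-block triggering Ford describes can be pictured with a minimal Python sketch. Entity changes from the source subgraph are grouped by block, and the dependent handler runs once per block that actually has changes.

EntityChange, its fields, and the handler signature are hypothetical illustrations. The feature is still experimental, and this is not how graph-node implements it.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass(frozen=True)
class EntityChange:
    block: int
    entity_type: str
    entity_id: str
    operation: str  # hypothetical: "set" or "remove"


Handler = Callable[[int, List[EntityChange]], None]


def dispatch_by_block(changes: Iterable[EntityChange], handler: Handler) -> List[int]:
    """Call `handler` once per block that has entity changes, in block order."""
    by_block: Dict[int, List[EntityChange]] = defaultdict(list)
    for change in changes:
        by_block[change.block].append(change)
    dispatched: List[int] = []
    for block in sorted(by_block):
        # Blocks with no entity changes never appear here, so they trigger nothing.
        handler(block, by_block[block])
        dispatched.append(block)
    return dispatched


changes = [
    EntityChange(12, "Swap", "0xa", "set"),
    EntityChange(10, "Pool", "0x1", "set"),
    EntityChange(10, "Swap", "0xb", "set"),
]
seen = []
print(dispatch_by_block(changes, lambda block, batch: seen.append((block, len(batch)))))
# [10, 12]
print(seen)  # [(10, 2), (12, 1)]
```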

Chain data management [40:53]

Ford: On the topic of chain data management, would a graphman command to remove all subgraph deployments for a specific chain be useful often? Or maybe just once in a blue moon?

We’re working on a command to rewind all subgraphs related to a specific chain. This could be super useful if there is an event where maybe you realized your provider RPC or Firehose missed some data for a specific chain and you want to rewind all your subgraphs on that chain.

- Derek | Data Nexus: Mass rewind would be very nice.

- Matthew Darwin | Pinax: Yes, good feature!

- Vince | Nodeify: Yes, please.

- Colin | StreamingFast: Rarely but still useful when needed.

- Vincent | Data Nexus: Yeah, that one I’d probably use only when a testnet is deprecated.

- Slimchance: InfraDAO does this multiple times a week, but we have scripts. 😅 Always nice to have the option to delete all though.

- Matthew Darwin | Pinax: For the rewind all subgraphs, can they be not all done at the same time (e.g., schedule only a few at a time, maybe across shards)?

- Ford: I believe the approach we’re taking is to do them in sequence. One at a time.

- Matthew Darwin | Pinax: That’s fine. Was concerned about overloading the database.
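Ford’s sequential approach also addresses Matthew’s concern about overloading the database. It amounts to something like the following Python sketch.

The deployment-to-chain mapping, the chain names, and the rewind callable are hypothetical stand-ins. They do not reflect the interface of the graphman command being built.

```python
from typing import Callable, Dict, List


def rewind_chain(
    deployment_chains: Dict[str, str],
    chain: str,
    target_block: int,
    rewind: Callable[[str, int], None],
) -> List[str]:
    """Rewind every deployment indexing `chain` to `target_block`, strictly one at a time."""
    targets = sorted(dep for dep, c in deployment_chains.items() if c == chain)
    completed: List[str] = []
    for dep in targets:
        # `rewind` is assumed to block until this deployment is done, so the
        # database only ever handles one rewind at a time.
        rewind(dep, target_block)
        completed.append(dep)
    return completed


log = []
done = rewind_chain(
    {"QmA": "arbitrum-one", "QmB": "mainnet", "QmC": "arbitrum-one"},
    "arbitrum-one",
    250_000_000,
    lambda dep, block: log.append((dep, block)),
)
print(done)  # ['QmA', 'QmC']
```

Matthew’s variant, spreading work across shards, would swap the single loop for one sequential loop per shard.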

Silo Finance Arbitrum subgraph [44:25]

There was an issue with the Silo Finance Arbitrum subgraph, but it has been fixed.

Do we need subgraph police to make sure that people don’t deploy sub-par subgraphs to the network?

Derek | Data Nexus: I built this subgraph, so I’m the subgraph deployer, and I’m making everyone feel the pain. During subgraph development, you have to think about how it’s going to scale. As we were building it, they wanted very specific data points. I was either going to take a calculus course to apply the crazy interest rate logic in the subgraph mappings, or make an RPC call. I knew the RPC call would work but would not scale well, and they said they didn’t care about scalability.

The subgraph had already hit the limits of how well it could scale back when they had 6 markets. Now they’ve got 40 markets across 3 or 4 different chains, so our servers aren’t keeping up, and we need to dial it down a lot.

We do have another deployment that we’re currently syncing. Right now we’re at 500 blocks a minute on Arbitrum, and Arbitrum produces 150 blocks a minute. So it’s not progressing super rapidly, but it reduces the overall RPC load by quite a bit.

It’ll still need to go through data testing once we get to the actual chain head.
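The sync numbers above imply a net catch-up rate. At 500 blocks a minute against 150 new blocks a minute, the deployment gains about 350 blocks on the chain head each minute.

A small Python helper makes the estimate explicit. The 1,000,000-block backlog in the example is a made-up figure, not one from the discussion.

```python
def minutes_to_chain_head(blocks_behind: int, sync_rate: float, chain_rate: float) -> float:
    """Minutes until a syncing deployment reaches the chain head, given per-minute rates."""
    net_rate = sync_rate - chain_rate  # blocks gained on the head per minute
    if net_rate <= 0:
        raise ValueError("sync rate must exceed the chain's block production to catch up")
    return blocks_behind / net_rate


minutes = minutes_to_chain_head(1_000_000, sync_rate=500, chain_rate=150)
print(f"{minutes:.0f} minutes (about {minutes / 60:.1f} hours)")
# 2857 minutes (about 47.6 hours)
```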

Ford: declarative eth_calls?

Derek: Yeah, declarative eth calls would be so much better except that we don’t have the ability to input hardcoded values.

Mack: declarative eth_calls are hot... they make RPC-intensive handlers much more performant (though there are limitations: you can only use event params), and... that’s why we need declarative entity loads (nudge).

GIP coming soon

Ford: Hard to do that out of band though (ahead of time).

Mack: Totally – but there is reason enough to start figuring out when it could work.

Subgraph developer incentives for quality [53:37]

What incentives could we put in place to encourage developers to deploy high quality and optimized subgraphs?

- Matthew Darwin | Pinax: Not sure how indexers can express “crappy subgraph-ness” as part of the protocol. Awesome examples will help new subgraph development.

- Vincent | Data Nexus: Right now, it’s probably just cost models and making the crappy subgraphs pay.

- Mack: Time for reputation to make its way into subgraph owners/deployers?

- AbelsAbstracts.eth | GraphOps: Yeah, I was thinking about BuildersDAO as a useful community to help here.

- Matthew Darwin: “BuildersDAO Approved”(tm)

- Ford: Enabling the community to help each other, and to be incentivized to help each other would be a way to help community of diverse data developers scale.

- Mack: Quickly incorporating a subgraph auditing service off the back of this convo. Derek, we can offer free subgraph audits for a limited time.

- Mack: 100% & on GitHub.

- Slimchance: Have a button that allows you to pay for 60 min subgraph consulting right in the studio – paid by the same wallet.

- Matthew Darwin: At least we could put a badge on Explorer.

- Mack: And if they have this badge – they get some free perk like 100k queries.

- Derek | Data Nexus: I like this idea. Monthly drops to Builder Reviewed subgraphs.

- Josh Kauffman | StreamingFast.io: Free curation of some amount if the subgraph can be synced in under [SOME AMOUNT OF TIME THAT IS REASONABLE].

Don’t miss next week’s session! The new DBA and Lutter from Edge & Node are coming to talk about Postgres.
