TL;DR: In this recap of IOH #166, Matthew from Pinax provides helpful context for important steps to take for the Firehose Ethereum 2.6.5 release. The indexer community shares tools and tips on many topics, including cleaning up old deployments, determining how long a subgraph may take to sync, and scaling up operations to support the growing network.

Opening remarks

Hello everyone, and welcome to episode 166 of Indexer Office Hours!

GRTiQ 177

Catch the GRTiQ Podcast with Eliott Teissonniere, co-founder and CTO at Nodle, a web3 network that harnesses smartphones as nodes to establish a digital trust network for societal benefit.

Repo watch

The latest updates to important repositories

Execution Layer Clients

- Geth New release v1.14.7 :

- This is a hot-fix release for a bug that affects only the previous release. Users of v1.14.6 are kindly requested to update.

- sfeth/fireeth: New releases v2.6.4 :

- Substreams bumped to v1.9.0

- Important Substreams bug fix

- Fixes a bug introduced in v1.6.0 that could result in a corrupted store “state” file if all the “outputs” were already cached for a module in a given segment (a rare occurrence).

- We recommend clearing your Substreams cache after this upgrade and reprocessing or validating your data if you use stores.

- Added

- Exposes a new intrinsic to modules: skip_empty_output, which causes the module output to be skipped if it has zero bytes. (Watch out, a Protobuf object with all its default values will have zero bytes.)

- Improves schedule order (faster time to first block) for Substreams with multiple stages when starting mid-chain.

- Matthew Darwin | Pinax posted in the chat: Also the firehose-ethereum 2.6.5 release:

- Fixes a bug in the blockfetcher, which could cause transaction receipts to be nil.

- Fixes a bug in Substreams where chains with non-zero first-streamable-block would cause some Substreams to hang. The solution changes the ‘cached’ hashes for those Substreams.

- More details and important steps for this release below under “Firehose Ethereum 2.6.5 Release.”

- Arbitrum-nitro New release v3.1.0 :

- This release includes critical updates for ArbOS 31, required for Arbitrum Sepolia by July 22, 2024, with additional improvements to the Anytrust DAS system, significant configuration changes, and enhanced system metrics, including support for auto-detection of database engines and new tracing opcodes.

- Ana from GraphOps confirmed they have tested this release on Arbitrum Sepolia, and it works with no issues.

Graph Stack

- Indexer Service & Agent: New release (pre-release) v0.21.4 :

- This release introduces a new command for receipt collection on indexer CLI, improves deployment management and logging, supports closing allocations on unsupported networks, and updates default subgraph settings, while also fixing several issues, updating documentation, and enhancing continuous integration support for Node.js.

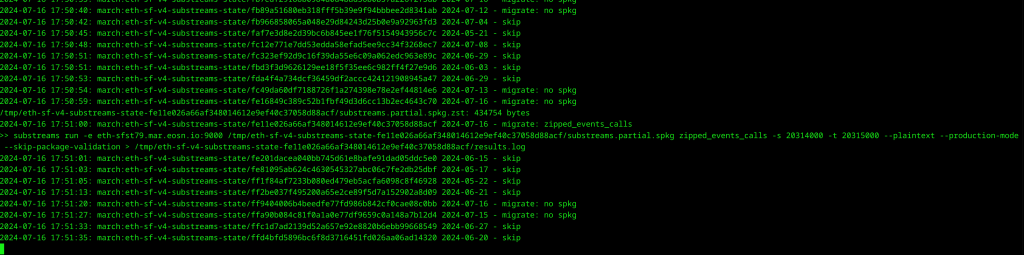

Firehose Ethereum 2.6.5 Release

This is not just a case of upgrading to the latest Firehose—there are some steps you need to take.

Matthew’s comments [Timestamp 6:22]:

For those with Substreams cache files, if you have an older version that created those cache files, they may not have the correct information, so they need to be deleted and then regenerated.

Deleting files and regenerating is not a big deal—however, if you have Substreams running live, and you don’t want to block it because you’re syncing a Substreams-powered subgraph, then you need to do a bit of work to regenerate the cache files without impacting the Substreams, then switch. Then, potentially rewind your subgraph.

Also, the latest Firehose Ethereum on 2.6.5 fixes a bug in the RPC Poller, so some Firehose blocks may not have all the correct information, and you’ll want to regenerate your Firehose blocks.

You may want to:

- Regenerate your Firehose blocks (only those generated with the RPC Poller).

- Then, regenerate your Substreams cache.

- Then, rewind your subgraph.

If you have a native integration (blocks are not generated by RPC Poller), these fixes will not affect you. The only Firehose blocks impacted are those generated using the RPC Poller.

The Substreams cache files are any chain. These are two different problems with two different fixes.

Derek | Data Nexus: Would pausing them first prevent a divergence?

Matthew: No, because you’re probably already running a version that has the problem. If you’re not running a version that has a problem, then yeah.

Substreams cache file storage

Matthew’s comments [Timestamp 12:31]:

Substreams allows you to keep multiple versions of cache files. There’s an extra parameter you can set in the Substreams configuration to tell it where to write the cache.

This is helpful for letting your existing Substreams run live while regenerating cache files behind the scenes. It depends on your specific setup how you’ll need to do this.

- Config: substreams-state-store-default-tag

By default, this is empty, but once you set this, it will put it at the beginning of the storage location. Substreams are cached by the module hash, so based on the module hash, it will now get whatever the tag is: </modulehash>. So if you set it to v1, your file name will be <v1/modulehash>.

There is a magical command line argument to Substreams to tell it to override the module hash:

X-Sf-Substreams-Cache-Tag

What I do is set up two different Substreams tier one nodes. One is the old one and one is the new one with the new module hash, reprocess the Substreams, and then away it goes. Otherwise, you’re going to need to pass the extra parameter to Substreams to tell it to use the different tag.

If you want all the .spkg files for the Substreams that have run, those are actually all in the cache. You can look at the old cache files and your .spkg file is there, so you can rerun it. You just need to pass this magical command line argument:

substreams run .... --skip-package-validation

This will allow you to use the .spkg file that’s contained within the cache.

Depending on when you upgraded to the version of Substreams that has a problem, the cache files that are dated before the upgrade are fine. You don’t have to reprocess the entire history; you can keep the original cache files and then just recalculate the other ones. So you may want to copy the cache files from the original location to the new location up to the point when you did the upgrade.

You’ll need to know when you upgraded, which hopefully you do. If not, anything after June 1 is suspect. Before June 1 is fine. If you haven’t upgraded for a while, you may not have a problem.

colin.ca from StreamingFast posted: What signaled the need to reprocess Firehose blocks on our end was this log in our Graph Node: block stream error Got a transaction trace with status UNKNOWN, datasource is broken, provider: firehose,

This was due to the transaction receipts not being fetched correctly. If your blocks got processed properly and you’re not seeing that log in your Graph Node anywhere, then you don’t need to reprocess.

Unfortunately, I don’t have context for the Substreams fix.

Matthew: You’d use the RPC Poller to generate Firehose blocks for chains like Avalanche that do not have native Firehose instrumented binary.

There’s a debug tool that you can run against your blocks to identify which blocks you need to regenerate. See Colin’s post just below for this tool.

colin.ca posted in the chat: The ad-hoc tool in the latest firehose-ethereum to check for blocks affected by the RPC Poller issue:

fireeth tools find-unknown-status <source datastore> <destination datastore> <start block> <end block>

This will write files like:

<merged_block_file_start_block>_<number_of_bad_blocks>.json

in the destination storage. To get more detail, the file will contain a list of the block numbers affected in the file.

If you need any help, feel free to DM Matthew Darwin | Pinax on Discord.

Matthew later posted in the chat: Quick Perl script I wrote to re-generate “corrupted” Substreams….

Protocol watch

Forum Governance

- Chain integrations:

- GIP-0067: Deprecate the L1 (mainnet) protocol

- Q: Will the deprecation of L1 break the indexer agent for those running in multi-network mode?

- Pablo: Unless we pause them, the L1 protocol contracts will keep working, so I don’t anticipate any issues with indexer agent in those cases. There just won’t be any gateway making queries. But my recommendation would be to close allocations and to remove all stake, delegation, etc. from L1, as those contracts won’t be monitored or maintained any more. Transferring everything to L2 before transfer tools are deprecated would be the best course of action imo.

- For more info, refer to the Forum post.

- Q: Will the deprecation of L1 break the indexer agent for those running in multi-network mode?

Forum Research

- Uniswap v3 position graph broken

- Juan: From what I could tell, it’s basically a bad indexer response, so the gateway not finding any indexers that are suitable for that query. Probably a transient error due to some technical difficulties with indexers or some rebalancing of allocations causing these issues. But as far as I can tell, it’s gateway-related, not subgraph-related.

Contracts Repository

Open discussion

Whiteboard Activity: Old-School Clinic Style (continued)

This session continued from last week, with open sharing of topics in the indexer community.

Note: Content has been lightly edited and condensed.

Abel asked: How has the recent transition affected people’s operations? Are there any tips or tools that people can share?

- Investing in monitoring and dashboards

Marc-André | Ellipfra shared: It’s good practice to monitor everything—the hardware and the services you offer. Monitoring subgraphs is relatively easy, but monitoring nodes might require more scripting. Substreams and Firehose need close attention. Make sure you have good dashboards and good automation in place to monitor everything.

Matthew Darwin | Pinax posted: Is anyone interested in having a specific tool/dashboard that monitors the state of all the chains (using Substreams)? We’re thinking of creating one.

Matthew elaborated: One of the challenges we have is identifying why Substreams or Firehose stop generating blocks. The usual answer is some upstream chain issue. Having more insight into what’s going on with all the chains is something we need to spend time on. We’re thinking about a tool that has links to all the different block explorers, the different statuses, endpoints where chains might post their status page, links to the Discord channels, and whatever else people might want.

This is just an idea. We’d like to see if people have features they’d like to see included in such a tool, or maybe people already have their own stuff in place.

Mickey | E&N: Since we have a status page, which sort of shows the health and outages of chains, what are your thoughts on integrating that with this potential dashboard? Or do you see them as two separate things?

Matthew: Could be integrated, absolutely. I think all those status pages have APIs. Or maybe this whole thing links into a status page. I haven’t thought that far.

Mickey: Are you thinking of using Grafana for your dashboard?

Matthew: Could. Not necessary. Grafana is great because it has many capabilities out of the box, so if we can do it all in Grafana, that’s probably a good thing. Otherwise, we could build a React web app or something.

Maybe we start with something simple, get feedback, and see where we go from there.

It’s a common problem our Ops team encounters all the time. We have about 50 blockchains, and there’s always one that has an issue.

Vince | Nodeify also posted a suggestion: Discord & Telegram bot webhook.

Integrated chains in The Graph Discord

Matthew: I think some work is going on to integrate the separate chain Discords with the Graph Discord server.

Mickey | E&N: Yeah, that work is in progress by Payne and Mitch.

If another chain has its own Discord server where it posts updates, we’ve enabled our Discord server to reflect those messages in one of our channels. So you won’t have to go and check a bunch of different Discord servers; you’ll be able to get those updates in The Graph’s Discord.

Matthew: That will be super amazing because I’m subscribed to a whole bunch of Discords, and the problem is finding the right channel in the navigation bar. There’s a hundred of them there.

Mickey posted: On a related note, we are also about to expose a new channel in our server that shows all the status page alerts. (So you wouldn’t even need to look at the status page anymore, you’d just see those alerts in one channel in our Discord server.)

Restoring a deleted subgraph

Derek | Data Nexus: On a separate topic, I had someone DM me today about doing an individual subgraph backup. I recall a while back, we had a scenario where we needed to do that. If memory serves, we created a new shard, copied it to an active shard, and then deprecated the new shard.

It would be nice to have a graphman integration for restoring a backed-up version of a subgraph and updating the appropriate subgraph tables, etc.

Derek elaborated: We take regular backups, but they’re of the entire database. Sometimes, you have a single subgraph that fails, or something happens, and you want to restore just one subgraph from your entire backup.

It’d be nice to have an easy integration of that because I remember we had that happen one time: we deleted a subgraph, and we needed it. We had to restore the whole database on a newly created shard, copy the intended subgraph to one of our active shards, and then later deprecate that new shard that we created.

It seems like that whole process could be automated and simplified in case you do have subgraphs that get deleted or they get corrupted for some reason. Maybe you remove a subgraph, and then later, you need that one for a graft base somewhere.

But having a simple graphman command where you can restore a singular schema would be helpful.

Indexer tools

Josh Kauffman | StreamingFast: We’ve been tooling up more, but I do things fairly manually in a spreadsheet. I’ve been looking at Vincent’s Indexer Tools, and it seems to do similar tasks to what I do, but I prefer the spreadsheet look over a website UI, and Colin’s built some stuff for us. As a non-technical guy, I can actually post my allocations and reallocations within Slack and have it run from there, and then we get progress updates that way. So trying to move some of the technical work from the engineers to the non-technical team.

Abel: What are some of the things you have on the spreadsheet, and how does it differ from Vincent’s Indexer Tools?

Josh: Fairly similar information to what you find on Vincent’s tools. I prefer working in a spreadsheet, and having all the rows a lot closer together makes it a little bit easier to view and go through. One thing Vincent has that’s great is the quick check on the status of what’s closable and what’s not closable at the moment, where I’ve tracked that more manually. But yeah, similar information, just a different view of that information.

How are indexers cleaning up old deployments?

Derek | Data Nexus: With 6,000 subgraphs now on the decentralized network, I used to use the curation station Telegram channel to get notified when versions get updated and new subgraphs get deployed. At this point, it’s unreadable because it’s flooded with stuff.

I’m trying to figure out better ways to see when subgraphs to which you’re allocated have upgraded, and whether or not you even need to keep some of those old subgraphs, so you’re not just filling up your storage with subgraphs from old versions.

Data Nexus has started to write some Postgres queries to identify deployments that are no longer the current version of a subgraph.

We use a query like this to identify N-1s that we’re allocated to:

[Change your SDG number and indexer address (replace sgd1370 with your graph-network-arbitrum subgraph)]

select

sm.display_name,

sdm.network,

sd.ipfs_hash,

(select max(cast(id as int)) from sgd182.epoch) - a.created_at_epoch as epochs_old,

a.allocated_tokens / 10^18 as allocated_tokens,

s.id

from sgd1370.allocation a

join sgd1370.subgraph_deployment sd on sd.id = a.subgraph_deployment and upper(sd.block_range) is null

join sgd1370.subgraph_deployment_manifest sdm on sdm.id = sd.ipfs_hash

join sgd1370.subgraph_version sv on sv.subgraph_deployment = sd.id and upper(sv.block_range) is null

join sgd1370.subgraph s on s.id = sv.subgraph and upper(s.block_range) is null

left join sgd1370.subgraph_meta sm on sm.id = s.metadata

where upper(a.block_range) is null

and a.status = 'Active'

and lower(a.indexer) like '0x4e5c87772c29381bcabc58c3f182b6633b5a274a'

and s.current_version != sv.id

and sv.id not in (select current_version from sgd1370.subgraph where upper(block_range) is null)

order by allocated_tokens desc;

I’m curious about what other people are doing for their cleaning-up phase.

Hau | Pinax: Doing it manually still, relying a lot on Explorer and Telegram. It’s time-consuming if it’s a lot.

What are indexers using to see where queries are happening?

Vince | Nodeify: What are people using to see where queries are happening atm? QoS subgraph? Graphseer?

Derek | Data Nexus: QoS

Vince | Nodeify: Same

Marc-André | Ellipfra: QoS subgraph, constantly pressing F5.

Derek | Data Nexus: Mostly looking at Yesterday+Today’s data, and joined with our subgraph.subgraph_deployment to see if we’re synced on the subgraph.

What are indexers using to view the QoS subgraph?

Vince | Nodeify: What are you using to view it [QoS subgraph]? Grafana, something else, custom frontend? pgAdmin? I use pg > Grafana.

Marc-André | Ellipfra: Personally, I use a Grafana jet dashboard with a few GraphQL queries, but I find this suboptimal because it’s hard to join the data with other subgraphs. So I think a better solution is probably either scripting it or, as Derek is doing, running SQL queries directly into the imposed grafts on the subgraph.

Derek | Data Nexus: pgAdmin on my end, but planning on putting together a Grafana dash with a few panels for Mike’s benefit when doing some of our allocations:

- Active query subgraphs

- Stake ratio approaching 10

- N-1 identification

How are indexers checking how long a subgraph may take to sync?

Josh Kauffman | StreamingFast.io: I’ve just recently started checking subgraphs before I allocate to them for the first time to see if they’ve had any 0x0s [POI] [Proof of Indexing unavailable] on them in the past (as a signal that they are too long to sync). Anyone doing something similar, or have any good tricks along that line?

Derek | Data Nexus: We mostly check entity counts. Entity counts are probably a closer metric to see how long something is going to take. Both could be cross-referenced.

Marc-André | Ellipfra: A lot of 0x0 is probably a thing to check, but you’ll get it only on old subgraphs.

Jim | Wavefive 🌊: Entities + off-chain sync always now. Stopped allocating to subs I’m not synced on.

Vince | Nodeify: ^ haven’t given it time for people to wave the white flag.

Derek | Data Nexus: Started this as well [in reference to entities + off-chain sync].

How are indexers scaling up operations to support the growing network of subgraphs?

Abel asked: What are you doing to scale up your operations to support the increasing number of subgraphs on the network?

Marc-André | Ellipfra shared: In my workflow, I’m currently doing a lot of manual checks and operations on every single subgraph, both for allocation and unallocation. It’s still working, but if we’re going to 10X the number of subgraphs again or 100X, I’ll have to revamp my whole workflow to basically automate everything and only act on exceptions, handling the 1% of subgraphs that will require manual intervention and automate the other 99%. I’m very far from that point, but yeah, we need to massively automate.

Jim | Wavefive shared: We’re also at the point where if an indexer has been running with monolithic infrastructure up until this point, they need to reassess their tech stack. My stack is certainly monolithic right now. It’s all on one very big, very powerful, very fast server. I’m off-chain syncing as much as I can within my own sort of filter of what I think is worth syncing, but I’m really pushing the boundary of what that one server can do, even with the fastest storage you can buy. The cracks are starting to show. As soon as you start moving into the multiple hundreds of subgraphs, you need to split the stack up and start moving to smaller stacks. Docker, bare metal, cubes, Linux containers, whatever you choose, you need to modularize. Otherwise, you’re not going to be able to serve anything beyond 500 subgraphs. Your QoS will be terrible.

stake-machine.eth: Running a separate Postgres for blockchain data, separate for subgraph data, running an indexer-service on top of subgraphs Postgres with query-node running on the same server with subgraphs data to lower latency. Ansible ftw.

Vince | Nodeify: Argo Workflows – The workflow engine for Kubernetes

Abel: There’s going to be a Launchpad session coming to IOH soon.

Matthew Darwin | Pinax: Future Firehose tooling is on Launchpad. Pinax is helping GraphOps on this.

Reach out to InfraDAO with tool ideas

Slimchance: For Indexers excited to build tools and improvements for the entire Indexer space, InfraDAO may also support with resources and bounties. If you have a great idea, feel free to reach out in my DMs.

No Comments