TL;DR: The open discussion focused on Kubernetes, led by Guillaume and Matthew from Pinax. They covered topics such as testing Kubernetes apps with kind, bootstrapping clusters with FluxCD, and using Helm with Flux to deploy Launchpad charts. The discussion also touched on bridging LXC containers to Kubernetes and using Crossplane for managing external endpoints.

Opening remarks

Hello everyone, and welcome to episode 180 of Indexer Office Hours!

GRTiQ 191

Catch the GRTiQ Podcast with Ben Huh, a pioneering entrepreneur, co-founder, and investor.

Ben is the visionary behind the massive success of Cheezburger, the internet sensation that transformed memes and user-generated content into a business powerhouse.

Repo watch

The latest updates to important repositories

Execution Layer Clients

- sfeth/fireeth: New release v2.7.5:

- Fixed data corruption in set_sum store policy operations, requiring cache deletion; improved development-mode request startup times.

- Nethermind: New release v1.29.1 :

- This version is a mandatory validator upgrade addressing Linux memory regression, OP Stack sync performance issues, and a Gnosis chain block production edge case.

- Avalanche: New release v1.11.12 :

- Optional but strongly encouraged update with numerous improvements, including ACP-77 fee calculations, OP Stack sync optimizations, and enhanced P2P handling; plugin version remains at 37.

Consensus Layer Clients

Information on the different clients

- Prysm: New releases:

- v5.1.1 :

- Recommended update that turns on experimental state management by default with opt-out flag and includes improved bandwidth stability via libp2p updates. This release deprecates gRPC gateway in favor of beacon APIs.

- v5.1.2 :

- Fixed panic recovery in beacon API streaming events endpoint affecting v5.1.1 REST mode validators and third-party integrations; critical for those using beacon API instead of gRPC.

- Teku: New release v24.10.2 :

Graph Stack

- Indexer Service & Tap Agent (RS): New releases:

- indexer-tap-agent-v1.2.0 :

- Improved error handling with backoff moved to tracker.

- Enhanced performance through concurrent RAV requests and optimized fee calculations using latest_rav.

- indexer-tap-agent-v1.2.1 :

- Includes bug fix to use INFO as default level for logs.

Matthew Darwin | Pinax posted: Firehose upgrade in progress at Pinax.

Firehose in the wild!

Protocol watch

The latest updates on important changes to the protocol

Forum Governance

Matthew Darwin | Pinax: Johnathan | Pinax is busy… EVM RPC for Chiliz

Johnathan | Pinax: Pinax supports Chiliz already. Firehose Substreams will be supported soon.

Contracts Repository

- chore(deps): bump secp256k1 from 4.0.3 to 4.0.4 #1063 (open)

- fix: added missing parameters to deploy script and reduced runs to 50 for SubgraphService #1062 (open)

- fix(Horizon): added missing parameter for TAPCollector deployment #1061 (closed)

Open discussion

Kubernetes Demo & Office Hours [9:11]

Continued! Kubernetes Office Hours in more detail led by Guillaume & Matthew from Pinax.

- Testing Kubernetes apps with kind

- Bootstrapping a cluster with FluxCD

- Using Helm with Flux to deploy launchpad-charts

Guillaume: I’m going to demo things a bit slower this week. Hopefully, you had the time to look at the Kubernetes IOH demo repo and play around. If there are any questions, please ask.

As a refresher from last week, this repo is a starting point to play with Kubernetes in a simple way. The ecosystem is moving toward Kubernetes, so having this deployed instead of a bunch of Docker Compose files is far more scalable.

From the chat:

Matthew Darwin | Pinax: Marc-André | Ellipfra, any k8s questions. 😉 (I saw you were asking Launchpad questions)

Marc-André | Ellipfra: I’m listening! Looking for a solution for the bleeding edge chains. Will probably have to learn to build Helm charts and contribute back.

chris.eth | GraphOps: Who hurt you and why did you choose Kubernetes to cope with it?

Vince | Nodeify: I’ll be contributing to those soon. 🙂

Matthew Darwin | Pinax: Vince | Nodeify and Johnathan | Pinax are hopefully going to help with that.

kind [10:50]

kind is a tool for running local Kubernetes clusters using Docker container “nodes.” kind was primarily designed for testing Kubernetes itself, but may be used for local development or CI.

Guillaume: kind is not production-grade, but it is really useful for developing, trying things out, and deploying a Kubernetes cluster in the simplest way possible. The only thing you need is Docker installed on your machine, and then it will create the control plane, the worker nodes—all the Kubernetes components in a Docker container, and you can create multiple nodes by setting up multiple Docker containers. Then you can replicate a whole cluster in your local environment, and you can do a bunch of things with that from your kind cluster, like external load balancing, ingress, and local DNS.

At Pinax, we set up our own DNS provider for services, so I was able to use kind to bootstrap a DNS infrastructure inside of kind and then use it to test for services. Then, we can deploy this to production.

Setting up a cluster is really easy [12:09].

Everything is deployed from a Tiltfile. You can think of it as a Makefile aimed specifically at Kubernetes. If you’re coding an application and want live updates inside a cluster, you can use a Tiltfile to recompile and reload your app and update your kind cluster automatically.

The kind cluster config is basically just a YAML file, and you set up a bunch of things. Watch at 12:48.

Watch Guillaume create a cluster at 13:48.

Tools that kind will deploy for your cluster:

- Controller manager

- Kube scheduler

- API server

Usually, it would also deploy the internal DNS, also known as kube-dns or CoreDNS. kind uses flannel for the container network interface (CNI), the component that links pods and containers inside your cluster: if one pod wants to send a request to another pod running in the cluster, the two need to be able to communicate with each other.

In this demo, we have requested to disable the CNI because we don’t want to use flannel. We want to use Cilium.
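As a rough illustration of what such a cluster config can contain, here is a minimal Python sketch that builds a kind config with the default CNI switched off. The `kind.x-k8s.io/v1alpha4` API version and the `networking.disableDefaultCNI` field come from kind's documented config format. The function name and the node count are assumptions for illustration, not the contents of the demo repo.

```python
import json


def kind_cluster_config(workers: int = 2) -> dict:
    """Build a kind cluster config with the default CNI disabled.

    The worker count is an illustrative assumption, not the demo's value.
    """
    nodes = [{"role": "control-plane"}]
    nodes += [{"role": "worker"} for _ in range(workers)]
    return {
        "kind": "Cluster",
        "apiVersion": "kind.x-k8s.io/v1alpha4",
        # With the default CNI disabled, pods stay Pending until another
        # CNI (Cilium in this demo) is installed.
        "networking": {"disableDefaultCNI": True},
        "nodes": nodes,
    }


if __name__ == "__main__":
    print(json.dumps(kind_cluster_config(), indent=2))
```

JSON is a subset of YAML, so the printed document carries the same fields a hand-written YAML kind config would.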

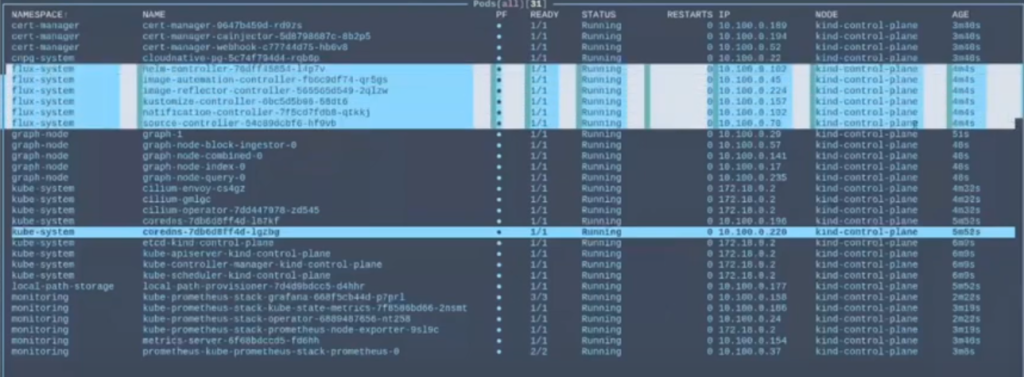

Watch what deploys from the tiltfile at 15:40.

In this demo, we use Flux for the continuous deployment (CD) in the cluster.

The cluster waits for the CNI and the local storage provisioner, because the local path provisioner needs the CNI in order to provision storage on your machine. With the Tiltfile, we deployed Cilium, which runs cilium-envoy and cilium-operator. Once Cilium is deployed, CoreDNS and the local path provisioner will work, and from there you have a fully operable Kubernetes cluster.
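The startup ordering described here can be pictured as a small dependency graph. The sketch below uses Python's standard `graphlib` to compute one valid order. It only models the relationships mentioned above (CoreDNS and the local path provisioner wait on Cilium). The dictionary and function names are hypothetical, and this is not how Tilt or Kubernetes actually schedule the components.

```python
from graphlib import TopologicalSorter

# Each key maps to the set of components it has to wait for.
DEPENDS_ON = {
    "cilium": set(),
    "coredns": {"cilium"},
    "local-path-provisioner": {"cilium"},
}


def deploy_order(graph: dict) -> list:
    """Return one order in which every component follows its dependencies."""
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(deploy_order(DEPENDS_ON))
```

Cilium always comes first. The relative order of CoreDNS and the local path provisioner is not fixed, since neither depends on the other.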

Matthew: Why would you want to choose Cilium?

Guillaume: Because Cilium can do cluster mesh, which is a way to share services between clusters across data centers. This is something we need at Pinax because we have multiple data centers, and we want services to be accessible from one cluster to another without using some sort of WireGuard tunnel, which is slow. Cilium doesn’t use a WireGuard tunnel; it uses eBPF at its core, hooking directly into the Linux kernel so you can inject code there to reroute your packets across data centers. It’s much faster and more flexible than just a tunnel.

I think at this point in the Kubernetes world, Cilium is like the gold standard of Kubernetes and CNI. Pretty much every cloud provider is moving toward Cilium.

From the chat:

c.j. | GraphOps: Isn’t the cluster mesh established with tunnels between clusters? That Cilium may use IPSec or WireGuard for? I think it is, but can be wrong.

Guillaume: Not sure, it’s been a while since I played with cluster mesh.

Vince | Nodeify: You can with this automagically: Talos KubeSpan [Learn to use KubeSpan to connect Talos Linux machines securely across networks.] Automagic WireGuard.

Matthew Darwin | Pinax: Everything Talos, according to Vince. Abel | GraphOps, sounds like a future demo from Vince. 😉

AbelsAbstracts.eth | GraphOps: Yes, sir, Vince and I are already in talks 😎 He’s going to be presenting on the 5th of November. 🥳

Flux [20:10]

There’s also Flux, which was deployed. We have a namespace here: flux-system.

Flux deployed a bunch of controllers: the helm-controller; the image-automation-controller, which gives you automatic updates; and the image-reflector-controller, which works together with the image-automation-controller.

Then you have the kustomize-controller, which allows you to create deployments from Kustomizations.

You can tell the notification-controller to notify you on Discord or Slack when there’s an update. You can have webhooks with the notification-controller, so you can track and have alerts for every deployment or if there’s a new version of an app.
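To make the notification setup more concrete, here is a hedged Python sketch of the two Flux objects usually involved: a `Provider` (where to send messages) and an `Alert` (which events to forward). The `notification.toolkit.fluxcd.io/v1beta3` API version is an assumption and may differ between Flux releases. The object names, channel, and secret name are hypothetical, and the referenced Secret is expected to hold the webhook URL.

```python
import json

API_VERSION = "notification.toolkit.fluxcd.io/v1beta3"  # assumed; check your Flux version


def slack_provider(name: str, channel: str, secret_name: str) -> dict:
    """Provider that sends Flux events to a Slack channel."""
    return {
        "apiVersion": API_VERSION,
        "kind": "Provider",
        "metadata": {"name": name, "namespace": "flux-system"},
        "spec": {
            "type": "slack",  # "discord" is another provider type
            "channel": channel,
            # Secret holding the webhook URL (hypothetical name).
            "secretRef": {"name": secret_name},
        },
    }


def helm_release_alert(name: str, provider_name: str) -> dict:
    """Alert that forwards events from every HelmRelease in the namespace."""
    return {
        "apiVersion": API_VERSION,
        "kind": "Alert",
        "metadata": {"name": name, "namespace": "flux-system"},
        "spec": {
            "providerRef": {"name": provider_name},
            "eventSeverity": "info",
            "eventSources": [{"kind": "HelmRelease", "name": "*"}],
        },
    }


if __name__ == "__main__":
    provider = slack_provider("slack", "deployments", "slack-webhook")
    alert = helm_release_alert("helm-releases", provider["metadata"]["name"])
    print(json.dumps([provider, alert], indent=2))
```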

The source-controller pulls GitHub or Helm repos or any container image repository that your applications are going to use or even where your applications are stored.

We chose Flux at Pinax because it’s easy from an operator point of view. It’s straightforward to bootstrap, play around with, and extend as it’s made to be simple and unopinionated. It does one thing: the CD (continuous deployment), but it’s easily extendable. You can use Flux to do multiple clusters with multi-tenancy.

There’s also Argo, which is similar to Flux, but is more opinionated with an all-in-one toolkit rather than being able to extend and use different solutions for different parts of the whole cluster. The main advantage of Argo is its nice UI, whereas the Flux UI is kubectl commands and tools.

You can use them together. Say you want to have 500 clusters to reconcile; you can use Flux for the infrastructure and then manage your applications and tenants with Argo.

From the chat:

Vince | Nodeify: You can manage 1,000 clusters with one Argo instance.

calinah | GraphOps: But have you tried? Cuz I’m getting a headache just thinking about it.

Vince | Nodeify: I’ve done three in homelab, it’s nice. 1,000 sounds terrible.

Matthew Darwin | Pinax: Need more hardware.

Vince | Nodeify: I’d rather make cluster beefier than make another, lol. But good for prod, stage, dev.

calinah | GraphOps: Depends on the use case – at some point, beefier is not enough. Having something like Argo for managing multiple clusters is great – as long as we’re not talking about 100s of clusters.

Vince | Nodeify: Yes, especially nice because it can abstract things into projects, so you can manage teams within the same cluster and see what teams are doing what, etc., and for those that don’t want to worry about scaling and like spending money to do less, there is Akuity: End-to-end GitOps platform for Kubernetes. Deploys Argo, with good ol’ unlimited compute.

calinah | GraphOps: Have you played with Akuity?

Vince | Nodeify: Yes, I’ll be using it on my own infra.

Kubernetes IOH demo repo [23:27]

From a user or developer’s point of view, this repo is used to reconcile the cluster and the applications running in the cluster. So this is used by Flux to reconcile basically everything that’s running in the cluster aside from the CNI, which is Cilium.

Follow along as Guillaume explains how the repo is structured and the different parts at 24:50.

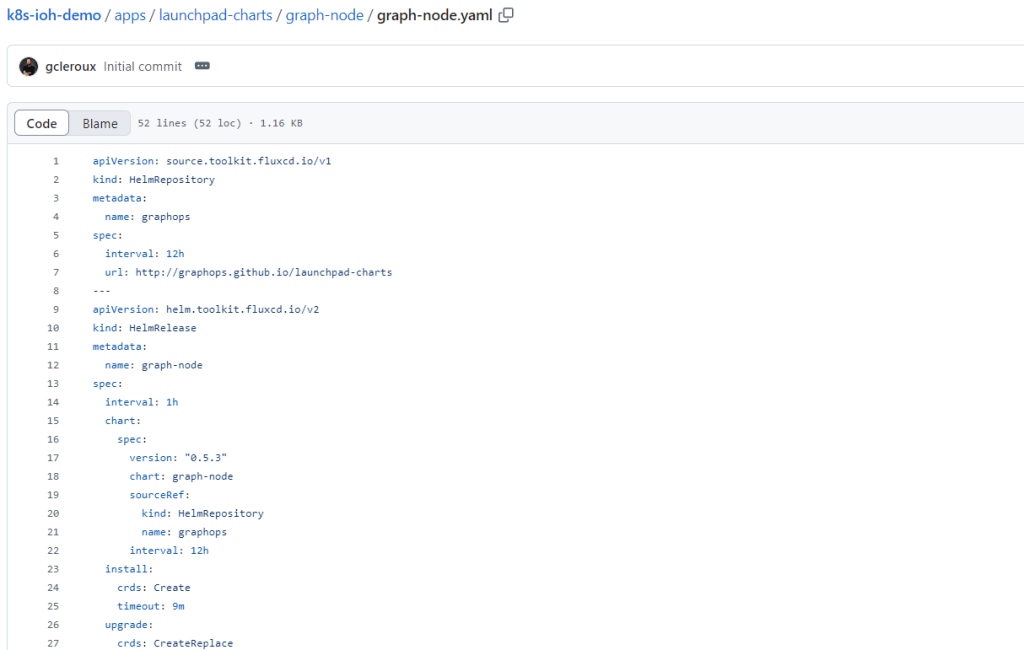

Helm charts [38:34]

You can use Helm charts to deploy Graph Node, and you can do this as part of Flux. If you look at the Graph Node Kustomization, it’s going to try to deploy everything in this directory: apps, Launchpad, Graph Node. It grabs this Kustomization, creates the namespace graph-node if it doesn’t already exist, and then deploys this file, graph-node.yaml, which basically defines a Helm repository.

The Helm repository’s name is graphops, and it pulls from the repo every 12 hours for all launchpad charts to stay up to date.

Then we create the HelmRelease, which deploys the Helm chart as an application in your cluster. We’re deploying a Helm chart called graph-node.
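The pairing of a Helm repository and a HelmRelease can be sketched as two Flux objects. The repository name `graphops`, the 12-hour interval, the chart name `graph-node`, and the `graph-node` namespace come from the description above. The API versions, the repository URL, and the release interval are assumptions and may not match the demo repo.

```python
import json

# Assumed URL for the Launchpad charts; verify against the GraphOps docs.
LAUNCHPAD_CHARTS_URL = "https://graphops.github.io/launchpad-charts"


def helm_repository() -> dict:
    """HelmRepository named graphops, refreshed every 12 hours."""
    return {
        "apiVersion": "source.toolkit.fluxcd.io/v1",  # assumed API version
        "kind": "HelmRepository",
        "metadata": {"name": "graphops", "namespace": "graph-node"},
        "spec": {"interval": "12h", "url": LAUNCHPAD_CHARTS_URL},
    }


def helm_release() -> dict:
    """HelmRelease that installs the graph-node chart from that repository."""
    return {
        "apiVersion": "helm.toolkit.fluxcd.io/v2",  # assumed API version
        "kind": "HelmRelease",
        "metadata": {"name": "graph-node", "namespace": "graph-node"},
        "spec": {
            "interval": "10m",  # illustrative reconcile interval
            "chart": {
                "spec": {
                    "chart": "graph-node",
                    "sourceRef": {"kind": "HelmRepository", "name": "graphops"},
                }
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps([helm_repository(), helm_release()], indent=2))
```

`kubectl apply -f` accepts JSON as well as YAML, though a GitOps repo would normally keep the equivalent YAML files.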

From the chat:

Vince | Nodeify: KubeVision gives you a Kube ChatGPT that can see into your cluster. 🙂

$59 / month for unlimited, one less thing to worry about, and Kargo handles promotion really well.

calinah | GraphOps: This looks great, but it only seems available if you use Akuity and you can’t get it standalone?

Vince | Nodeify: Kargo should work with any Argo, not sure about KubeVision.

Matthew: Even if you don’t agree with all of the configuration that we have at Pinax, maybe it gives you some other ideas and brings up questions or things to think about. I’d love to hear more about what other teams or people are doing around Kubernetes.

Vince | Nodeify: Reloader is awesome. Please tell me you’re all using GitOps, no matter the flavor, those who have taken the pill.

- c.j. | GraphOps: +1 for GitOps btw, Git really does the whole version control part really well.

Marc-André | Ellipfra: Definitely learned about additional things I could be doing with my clusters. Thanks for that.

Vince | Nodeify: If you’re on Argo, check out the additional things available. Lots of tools and plugins.

Marc-André | Ellipfra: Yeah, I’m probably using only 1% of Argo CD.

Vince | Nodeify: This made things nice in the indexing world for me: Sync Phases and Waves.

Launchpad charts

chris.eth | GraphOps: All the officially supported Charts in Launchpad

Pierre | Chain-Insights.io: Use Lens instead of K9s (Lens IDE for Kubernetes).

stake-machine.eth: I know Lens, but still… 🙂 you can’t buy true love.

Marc-André | Ellipfra: Yeah, and for our non-Kubernetes friends, you don’t need to start with something as comprehensive as what Pinax is doing. You can start super small, using a cloud service, and then move to your own hardware.

Vince | Nodeify: Also, if you have multiple clusters, kubie is a must. Makes life very easy. kubectx

stake-machine.eth: Proxmox

Bridging LXC containers to Kubernetes [52:28]

Matthew: Now, at Pinax, we’re at the stage where we have stuff in Kubernetes and stuff not in Kubernetes, and we need to bridge the two. So that’s a whole new thing that we’re looking at: how do we make the transition from the old style to the new without downtime?

All of our stuff was originally deployed in LXC containers. Most things are still in LXC actually, like Proxmox, and now we’re deploying Kubernetes clusters, and we’re thinking of putting the control plane nodes maybe in Proxmox.

Guillaume, what were you looking at in terms of bridging the LXC containers to Kubernetes?

Guillaume: Well, I’m going to break the scoop now, because it works as of yesterday. What we’re doing right now is we have our own DNS provider inside of Kubernetes, and we use Crossplane to be able to provision and monitor some external endpoints outside of the cluster. We have a bunch of applications running in LXC that are outside of Kubernetes, but we want to use the infrastructure DNS provider inside of Kubernetes for those services, so we can keep those services but migrate them to the cluster at some point… or not. We want to have the freedom to choose what we do, so we’re using Crossplane to create services with endpoints to stuff that’s running outside of the cluster, but still being able to reconcile from GitOps and from inside the cluster itself the services that are running outside.
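One common Kubernetes pattern for "services with endpoints outside the cluster" is a Service without a selector, paired with an EndpointSlice that lists the external address. The Python sketch below shows the shape of those two objects. How Pinax creates them through Crossplane is not described in the discussion, so this shows only the plain Kubernetes side. The service name, port, and IP address (from the documentation range 192.0.2.0/24) are hypothetical.

```python
import json


def external_service(name: str, namespace: str, port: int) -> dict:
    """Service with no selector, so Kubernetes does not manage its endpoints."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "ports": [{"name": "http", "port": port, "targetPort": port, "protocol": "TCP"}]
        },
    }


def external_endpoint_slice(name: str, namespace: str, ip: str, port: int) -> dict:
    """EndpointSlice pointing the Service at a workload outside the cluster."""
    return {
        "apiVersion": "discovery.k8s.io/v1",
        "kind": "EndpointSlice",
        "metadata": {
            "name": f"{name}-external",
            "namespace": namespace,
            # This label ties the slice to the Service of the same name.
            "labels": {"kubernetes.io/service-name": name},
        },
        "addressType": "IPv4",
        "ports": [{"name": "http", "port": port, "protocol": "TCP"}],
        "endpoints": [{"addresses": [ip]}],
    }


if __name__ == "__main__":
    # Hypothetical LXC-hosted service reachable at 192.0.2.10:8080.
    svc = external_service("legacy-api", "default", 8080)
    eps = external_endpoint_slice("legacy-api", "default", "192.0.2.10", 8080)
    print(json.dumps([svc, eps], indent=2))
```

Because both objects are ordinary manifests, they can live in Git and be reconciled like anything else in the cluster, which matches the goal of managing the external services from GitOps.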

Pierre | Chain-Insights.io posted: KubeVirt

Guillaume: Yeah, KubeVirt is also really interesting. I’ve played with it a fair bit to set up a Kubernetes cluster and it’s pretty great. If you have some VMs that you want to manage in Kubernetes, you can use KubeVirt to sort of scale, upgrade, and manage your VMs as Kubernetes pods.

TAP agent [46:17]

John K.: Not K8s, but I have a question about tap-agent. Do I need to add this address to the [tap.sender_aggregator_endpoints] config?

Ana responded: You don’t need to add that address. That’s just an additional gateway that someone set up. Right now, the only officially supported gateway is the Edge & Node one, but the tap-agent will show any gateway that exists.
